The Helmholtz Decomposition Theorem

In the previous lesson, we discussed two important integral theorems in vector calculus, Stokes’ theorem and the divergence theorem.

In this lesson, we’ll walk through another important theorem that has more to do with differential operators: the Helmholtz decomposition theorem.

In fact, the Helmholtz decomposition theorem is often called the fundamental theorem of vector calculus because of its importance (especially to physics).

Now, I’ve previously mentioned that any curl-free vector field can be expressed as the gradient of a scalar potential and any divergence-free vector field can be expressed as the curl of a vector potential (the proof for these is found in the problem set):

These are, however, only special cases of vector fields.

The Helmholtz decomposition theorem addresses the question of whether there exists a similar way of expressing a general vector field that isn’t necessarily curl-free or divergence-free.

The Helmholtz decomposition theorem states that any general vector field, under certain restrictions, can be expressed in terms of a scalar potential (curl-free) part and a vector potential (divergence-free) part:
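In symbols, one common way to write this statement is sketched below. Here Φ denotes the scalar potential and A the vector potential; the minus sign in front of the gradient is a convention assumed here (it matches the physics habit of writing the electric field as minus the gradient of a potential), and some texts use a plus sign instead.

$$
\mathbf{F} = -\nabla\Phi + \nabla\times\mathbf{A}
$$

The first term has zero curl (the curl of a gradient vanishes) and the second term has zero divergence (the divergence of a curl vanishes).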

This theorem is extremely powerful in the sense that it allows us to essentially decompose any vector field into a scalar potential and a vector potential. This is called the Helmholtz decomposition of a vector field.

This is of significant importance in, for example, electrodynamics (which we’ll discuss later).

Can a Vector Field Be Described Completely By Its Divergence and Curl?

Another way of looking at the Helmholtz decomposition theorem is by asking the question: given that we know both the divergence and curl of a vector field, can we uniquely determine the vector field itself?

The answer to this actually turns out to be yes. Now, there are some restrictions on when exactly this works, which I’ll explain later in this lesson.

Being able to uniquely determine a vector field simply by knowing its divergence and curl is extremely significant.

Take, for example, Maxwell’s equations in the static case (electrostatics and magnetostatics): these are equations that give you the divergence and curl of the electric and magnetic fields, given a particular charge and current distribution.

Therefore, knowing the divergence and curl allows us to uniquely determine these fields.

Derivation of The Helmholtz Decomposition Theorem

Next, I want to begin “developing” an approach for finding the Helmholtz decomposition of a vector field (i.e. its scalar and vector potential) given its curl and divergence.

We’ll deduce this process step by step using some concepts from vector calculus (as well as some new things, which I’ll explain along the way).

Now, the first thing we’ll do is make the assumption that the Helmholtz theorem makes in the first place; we assume that a general vector field can be written in the following form:

Let’s then take the divergence of this:

This will be our first equation, so keep this one at the back of your mind. Let’s now take the curl of the original vector field, which gives us:

We can now use an identity from vector calculus, which states that the curl of a curl can be expressed as:
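For reference, the standard form of this identity is written out below, where the Laplacian of a vector is understood to act on each Cartesian component of A separately.

$$
\nabla\times(\nabla\times\mathbf{A}) = \nabla(\nabla\cdot\mathbf{A}) - \nabla^2\mathbf{A}
$$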

So, we have the curl of our vector field being:

This can be simplified even further. Here’s where we’ll do a little “magic trick” called a gauge transformation.

A Brief Introduction To Gauge Transformations

So, we now have the curl of our vector field expressed in terms of the vector potential as:

Now, the thing about the vector potential is that it is not unique; in other words, there are an infinite number of possible vector potentials that we could use to express our original vector field in terms of.

In particular, we can always add the gradient of any scalar function to our vector potential and it would still work as a valid vector potential.

We can see this if we “transform” our vector potential by adding to it the gradient of an arbitrary scalar function φ:

However, the original vector field, according to Helmholtz’s theorem, remains unchanged:

This kind of “transformation” that essentially leaves everything unchanged is called a gauge transformation.

Here we made the gauge transformation of adding the gradient of a scalar field to the vector potential. The scalar field here is usually called the gauge function, while the potential being transformed is an example of what physicists call a gauge field.

Gauge transformations are incredibly important in modern physics, such as in quantum field theory. It turns out that gauge fields in quantum field theory describe certain particles known as gauge bosons (such as photons).

Anyway, the point of a gauge transformation for our purposes is that we can essentially choose any vector potential up to the gradient of a scalar field. Choosing some particular gauge function is called gauge fixing.

We can actually use gauge fixing to simplify our lives. In particular, we will choose a gauge such that the Laplacian of the gauge function φ is the negative divergence of A:

Now, why would we want to do this? Well, this has the particular property of making the divergence of our vector potential zero. We can see this by taking the divergence of this new vector potential:

So, we’ve now chosen a gauge such that:

Going back to our equation for the curl of the vector field, this will reduce to:

So, just to recap what we did here: since the vector potential is not unique, we can always choose it in some form without any loss of generality (this is called gauge fixing). Here we chose the gauge such that the divergence of the vector potential is now zero (this particular choice is commonly known as the Coulomb gauge).
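Written out, the gauge-fixing argument of this section can be sketched as follows. The primed symbol for the transformed vector potential is notation assumed here for illustration; φ is the arbitrary scalar function being added.

$$
\begin{aligned}
\mathbf{A}' &= \mathbf{A} + \nabla\varphi, \\
\nabla\times\mathbf{A}' &= \nabla\times\mathbf{A} + \nabla\times(\nabla\varphi) = \nabla\times\mathbf{A}, \\
\nabla\cdot\mathbf{A}' &= \nabla\cdot\mathbf{A} + \nabla^2\varphi = 0 \quad\text{if}\quad \nabla^2\varphi = -\nabla\cdot\mathbf{A}, \\
\nabla\times\mathbf{F} &= \nabla\times(\nabla\times\mathbf{A}') = \nabla(\nabla\cdot\mathbf{A}') - \nabla^2\mathbf{A}' = -\nabla^2\mathbf{A}'.
\end{aligned}
$$

The second line shows that the field itself is unaffected by the transformation, and the last line is the simplified curl equation that the gauge choice buys us.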

Poisson’s Equations

It is now time to put all of the pieces together. We’ve now derived equations for both the divergence and curl of a general vector field in terms of the scalar and vector potentials:

The whole purpose of this was to develop a method for reconstructing a vector field simply by knowing its curl and divergence. Well, here we have exactly it!

To further explain this, let’s say that we know the curl and divergence of a vector field. So, these are some known scalar d and some known vector c:

Then, by the equations from above, we have:

The right-hand sides of these equations are known given that we know the curl and divergence of our vector field. Therefore, we can solve for the scalar and vector potentials from these two equations.

These are called Poisson’s equations for the scalar potential and vector potential, respectively. These are essentially second order partial differential equations, which we can see by writing out the Laplacian operator in Cartesian coordinates, for example:

Moreover, this second equation is actually three Poisson’s equations in disguise; one for each vector component, which would be:

Now, actually solving these Poisson’s equations in practice requires some knowledge of the theory of PDEs (partial differential equations) and in most cases, solving them is quite a difficult task.

However, the point of this is the fact that given that we know the divergence and curl of a vector field, we can indeed deduce the vector field itself by solving Poisson’s equations (at least theoretically speaking).

Then, given the solutions to Poisson’s equations (i.e. the scalar and vector potentials), we can reconstruct the full vector field by the Helmholtz decomposition theorem:
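Collected in one place, the equations of this section can be sketched as below. This assumes the sign convention in which the scalar-potential part enters with a minus sign and a vector potential with zero divergence; with the opposite sign convention, the first Poisson equation would change sign accordingly.

$$
\begin{aligned}
\nabla\cdot\mathbf{F} &= d, \qquad \nabla\times\mathbf{F} = \mathbf{c}, \\
\nabla^2\Phi &= -d, \\
\nabla^2\mathbf{A} &= -\mathbf{c}
\quad\Longleftrightarrow\quad
\nabla^2 A_x = -c_x,\;\; \nabla^2 A_y = -c_y,\;\; \nabla^2 A_z = -c_z, \\
\mathbf{F} &= -\nabla\Phi + \nabla\times\mathbf{A}.
\end{aligned}
$$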

Finding The Helmholtz Decomposition of a Vector Field (A Step-By-Step Approach)

Essentially, finding the Helmholtz decomposition of a vector field amounts to finding a scalar potential and a vector potential that define the vector field.

We’ll discuss later the question of why exactly you’d want to do this, but knowing the scalar potential and the vector potential of a vector field is extremely useful in certain situations.

Now, the thing about these potentials is that they are not unique. In other words, we can find multiple scalar and vector potentials for a single vector field.

That being said, the Helmholtz decomposition can be used to find a vector field given that we know its curl and divergence.

In this case, the uniqueness of the potentials is not really an issue since they are merely just mathematical tools to construct the vector field.

It’s also possible to find multiple vector fields with the same curl and divergence without further restrictions on the vector field itself. We’ll discuss this “non-uniqueness” issue later and how to fix it.

However, let’s first look at the general method of how we can construct a vector field from its curl and divergence, be this vector field unique or not.

Below you’ll find a step-by-step method for this, which essentially uses the results we derived earlier.

- Given the divergence and curl of a vector field, construct Poisson’s equations for the scalar potential and the vector potential. So, if we know the divergence d and the curl vector c, write down Poisson’s equations:

- Solve Poisson’s equations for the scalar potential Φ and the vector potential A. Generally, there will be infinitely many possible solutions, but any particular solution will work.

- Construct the vector field using the scalar and vector potentials. To do this, use the Helmholtz decomposition theorem, which states that any vector field can be expressed as:

- You then have your vector field constructed simply out of its curl and divergence! Note, however, that in general, the answer is not unique (but it can be made unique; this is discussed later).
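The steps above can be sketched numerically. The inputs below (divergence d = 2 and curl c = (0, 0, 2)) are hypothetical values chosen for illustration and are not the lesson's own example; the sign convention F = -∇Φ + ∇×A is also an assumption. The potentials are simply guessed, and finite differences are then used to check that the reconstructed field has the requested divergence and curl.

```python
# Hypothetical inputs (not the lesson's example): d = 2, c = (0, 0, 2).
# Assumed convention: F = -grad(Phi) + curl(A), so lap(Phi) = -d, lap(A) = -c.

H = 1e-3  # finite-difference step (illustrative choice)

def phi(x, y, z):
    # Guessed solution of lap(Phi) = -2
    return -(x**2 + y**2) / 2

def A(x, y, z):
    # Guessed solution of lap(A) = -(0, 0, 2); its divergence is zero
    return (0.0, 0.0, -(x**2 + y**2) / 2)

def partial(f, i, p):
    """Central difference of the scalar function f along axis i at point p."""
    lo, hi = list(p), list(p)
    lo[i] -= H
    hi[i] += H
    return (f(*hi) - f(*lo)) / (2 * H)

def component(v, k):
    """Scalar function returning component k of the vector field v."""
    return lambda x, y, z: v(x, y, z)[k]

def grad(f, p):
    return tuple(partial(f, i, p) for i in range(3))

def div(v, p):
    return sum(partial(component(v, i), i, p) for i in range(3))

def curl(v, p):
    d = lambda k, i: partial(component(v, k), i, p)  # d(v_k)/d(x_i)
    return (d(2, 1) - d(1, 2), d(0, 2) - d(2, 0), d(1, 0) - d(0, 1))

def F(x, y, z):
    # Step 3: assemble the field from the two potentials
    g = grad(phi, (x, y, z))
    c = curl(A, (x, y, z))
    return tuple(-g[i] + c[i] for i in range(3))

point = (0.7, -1.2, 0.4)
div_F = div(F, point)
curl_F = curl(F, point)
print("F    =", F(*point))
print("div  =", div_F)
print("curl =", curl_F)
```

For these guessed potentials the field works out analytically to F = (x - y, x + y, 0), so the printed divergence and curl should agree with d and c up to rounding error.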

Now, the difficult part in all of this is to actually solve Poisson’s equations.

The easiest way to actually solve them is to just guess the solutions, which works very well given that the curl and divergence are somewhat simple functions.

Generally, however, this is not going to give you a unique solution, only one possible solution (to get a unique answer you would generally have to solve the PDE with boundary conditions).

However, one possible solution for the scalar potential and one possible solution for the vector potential are enough to construct a particular vector field with a specific curl and divergence.

Below you’ll find a practical example of how exactly we can find a vector field from its curl and divergence.

So, say we know that the curl and divergence of a given vector field are:

We now want to find the actual vector field itself. Now, these are actually simple enough to be able to just guess what the vector field would be, but the better and more general way would be to apply the steps given above.

So, we have the divergence and curl as:

Let’s begin by writing down Poisson’s equation for the scalar potential:

Here, it’s simple enough to just guess a solution. One particular solution will be:

Again I want to stress that this is NOT the only solution, it’s just one possible solution (which you can check by calculating the partial derivatives of this).

Now, that’s our scalar potential. For the vector potential, we have Poisson’s equation in the form:

We can further expand this in terms of a Poisson equation for each component of the vector potential A. However, we can see here that nothing depends on z, so we can right away just guess that a valid solution for the z-component will be:

For the x-component, we have the equation:

Again, we can just guess that one possible solution is:

For the y-component, we have the equation:

This has a solution as:

Now, combining these components, our vector potential is then:

Using this and the scalar potential we calculated before, the gradient of the scalar function and the curl of the vector potential are:

Using these, the vector field F is then:

So, we’ve successfully reconstructed the vector field from just its curl and divergence. You can check that this is indeed a valid solution by taking its curl and divergence. However, this is not the only valid solution; we could add certain terms to it and it would still have the same curl and divergence.

Moreover, there are many choices for the scalar and vector potentials here that give the exact same vector field.

However, if we only care about finding a particular vector field given its curl and divergence (which in many cases, will still be a useful thing to do), then the potentials don’t really matter; they are simply just mathematical tools for solving the problem.

Also, I know this example was basically just a bunch of guesswork, but actually solving it properly will require knowledge of the theory of PDEs, which is not the topic of this course or lesson.

This example simply highlights the overarching point; given the curl and divergence of a vector field, it is possible to find the full vector field. This may or may not be an easy problem, but it can be done. It can also be done uniquely under certain restrictions.

Restrictions For The Helmholtz Decomposition Theorem

The example above nicely illustrates the fact that we CAN reconstruct a vector field simply from its divergence and curl.

In the example, we had been given a divergence and a curl and we deduced from these the vector field itself:

There is just one problem; the above example is only one possible choice for the vector field.

To illustrate this, try to add the following vector field to the vector field F given above:

Now calculate its curl and divergence. You’ll find that it has exactly the same divergence and curl, but the vector field itself is certainly not the same vector field, far from it.

In general, if we have a certain solution (F1) to our problem (finding a vector field given its curl and divergence), we can always add to it the gradient of any harmonic scalar function and this will generally result in another valid solution (F2) that has the exact same curl and divergence:

A harmonic function φ is one that satisfies the so-called Laplace’s equation, i.e. it has zero Laplacian:

The reason that we can add the gradient of a harmonic function is that this will always have both zero curl and zero divergence. Therefore, the curl and divergence of the original vector field are left unchanged and thus, the new field is also a valid solution to our problem.

We can see this by considering the following; let’s assume that the curl and divergence of a vector field F1 are as follows:

Let’s now say we’ve found a solution, i.e. we’ve found the vector field F1. Now we’ll add to it the gradient of a harmonic function to get some other vector field:

Let’s take the curl of this, which will give us:

Okay, so this new vector field has the exact same curl. Let’s also check its divergence:

Since φ here is a harmonic scalar field, its Laplacian is zero and we have:

So, F2 has the exact same curl and divergence as F1, our “original” solution. Thus, F2 is also a perfectly valid solution, but in general, it’s a completely different vector field.

Now, you could repeat this process and add however many gradients of harmonic functions to this solution if you wish and you’d still have a solution. Therefore, there are infinitely many vector fields that solve the problem, and they may be vastly different from one another.
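This non-uniqueness is easy to check numerically. The sketch below uses a hypothetical field F1 = (x - y, x + y, 0) and the harmonic function x² - y² (both chosen here for illustration, not taken from the lesson's example) and compares the divergence and curl of F1 with those of F2 = F1 + ∇(x² - y²) using central differences.

```python
H = 1e-4  # finite-difference step (illustrative choice)

def F1(x, y, z):
    # Hypothetical field with divergence 2 and curl (0, 0, 2)
    return (x - y, x + y, 0.0)

def F2(x, y, z):
    # F1 plus the gradient of the harmonic function x**2 - y**2,
    # whose gradient is (2x, -2y, 0) and whose Laplacian is 2 - 2 = 0
    fx, fy, fz = F1(x, y, z)
    return (fx + 2 * x, fy - 2 * y, fz)

def partial(field, k, i, p):
    """Central difference of component k of field along axis i at point p."""
    lo, hi = list(p), list(p)
    lo[i] -= H
    hi[i] += H
    return (field(*hi)[k] - field(*lo)[k]) / (2 * H)

def div(field, p):
    return sum(partial(field, i, i, p) for i in range(3))

def curl(field, p):
    return (
        partial(field, 2, 1, p) - partial(field, 1, 2, p),
        partial(field, 0, 2, p) - partial(field, 2, 0, p),
        partial(field, 1, 0, p) - partial(field, 0, 1, p),
    )

point = (0.5, 1.5, -2.0)
div1, div2 = div(F1, point), div(F2, point)
curl1, curl2 = curl(F1, point), curl(F2, point)
print("F1:", F1(*point), "div", div1, "curl", curl1)
print("F2:", F2(*point), "div", div2, "curl", curl2)
```

The two fields take different values at the sample point, yet their divergence and curl agree, which is exactly the ambiguity described above.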

The problem now is that, okay, we can find a vector field from just its curl and divergence. But generally speaking, there are an infinite number of possible vector fields that would satisfy this that may be totally different from one another.

So, is there any point in all of this? Sure, we can find examples of vector fields with a certain curl and divergence, but none of these are uniquely defined.

However, there is a way to fix this, which is to assume that the vector field vanishes (goes to zero) far away from the region we’re interested in.

This doesn’t really change the properties of the vector field in the region we’re interested in studying, but it does allow us to find a unique solution.

In other words, by assuming that the vector field vanishes far away, we now have only one possible choice for a vector field with a given divergence and curl!

To see this, consider again a solution F1 to our problem and then another general solution F2, which will differ by the gradient of a harmonic function:

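By making this assumption, both fields vanish far away (F1=0 and F2=0 there), so we get:

So, what we find by making such an assumption is that this ‘gradient of a harmonic function’ has to go to zero far away. Each component of this gradient is itself a harmonic function, and a harmonic function defined on all of space that goes to zero at infinity must be zero everywhere (this follows from Liouville’s theorem for harmonic functions). So, the gradient has to be zero everywhere!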

Therefore, the solution F1 is actually the only possible solution. So, by making the vector field vanish far away, we can uniquely define the vector field from its curl and divergence.

Note that we can also add any constant to the vector field and in general, this would still be a valid solution. However, this assumption of the field vanishing far away also fixes that constant to necessarily be zero.

Of course, there will still be multiple choices for the scalar and vector potentials, but the vector field itself is uniquely defined.

Here are some little technicalities relating to this:

- When we say that a vector field “vanishes far away”, technically speaking we’re saying that the vector field goes to zero at infinity IF the vector field is defined everywhere in space.

- However, if the vector field is only defined in some particular (not infinite) region, then “vanishes far away” simply means that the vector field goes to zero at the boundaries of that region.

- A further technicality is that the vector field has to go to zero faster than 1/r = 1/√(x² + y² + z²) (written here in Cartesian coordinates). The mathematical way to state this boundary condition is:

Really, these boundary conditions come from the fact that the solutions of the vector field are fundamentally found from differential equations (Poisson’s equations) and generally, a unique solution to a differential equation can only be found if boundary conditions are specified.

Now, you may want to ask: doesn’t this boundary condition limit the possible vector fields we can uniquely construct from their curl and divergence?

Well, the answer to this is yes it does, absolutely.

For example, the vector field from the example earlier is not unique at all and it is impossible to find a vector field of that form which would be unique (since there is no way for this vector field from the example to be zero at infinity).

General Solutions To Poisson’s Equations

In practice, the boundary condition discussed above is used when solving Poisson’s equations for the potentials.

In fact, with this boundary condition, it is possible to derive a general solution to Poisson’s equations (with certain boundary conditions also imposed on the potentials, namely that they also go to zero at infinity).

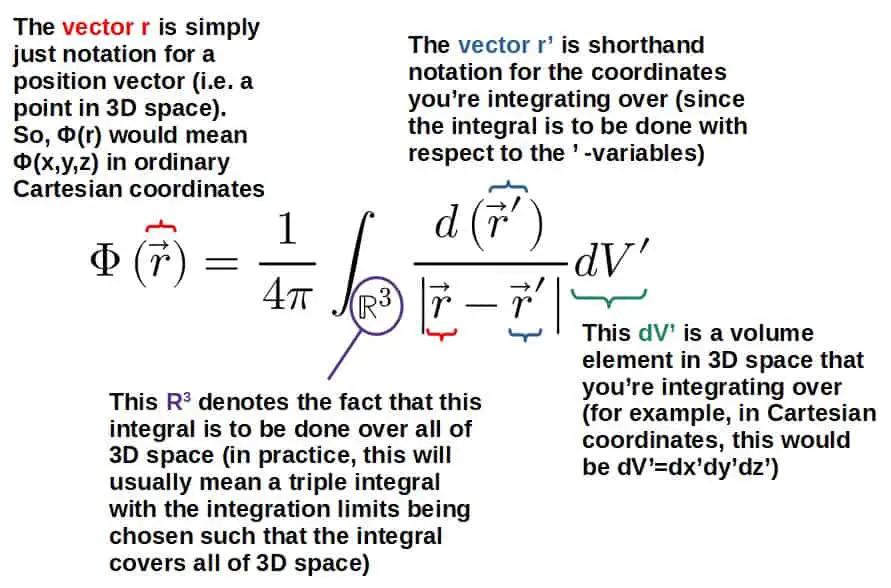

These general solutions look as follows:
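A sketch of these general solutions in standard notation is given below, assuming the sign convention in which Poisson's equations read ∇²Φ = -d and ∇²A = -c. Here r is the point where the potential is evaluated, r' is the integration variable running over all of space, and d and c are the known divergence and curl of the field.

$$
\Phi(\mathbf{r}) = \frac{1}{4\pi}\int \frac{d(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d^3r',
\qquad
\mathbf{A}(\mathbf{r}) = \frac{1}{4\pi}\int \frac{\mathbf{c}(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\,d^3r'
$$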

These may look complicated and in general, these will be somewhat difficult integrals to calculate. However, we can still understand what all the pieces in these solutions represent:

Below, you’ll also find an example of using these general solutions to calculate the electric and magnetic fields of a point charge as well as a moving point charge.

The really interesting thing about this is that the typical 1/r²-dependence of the electric field is simply a result of a deeper mathematical principle, namely the Helmholtz decomposition theorem and these general solutions of Poisson’s equations.

To get some intuition for what we’re going to do, let’s first begin by calculating the electric field of a simple stationary point charge q sitting at the origin.

The charge distribution of such a charge is going to be given by:

This δ-function here is called the Dirac delta function and essentially, it’s just a function defined as:

In other words, this charge distribution simply tells us that the charge density is zero everywhere except at the origin, where the charge q is located. There, the charge density is infinitely large (since we’re basically looking at the density exactly where the point particle is located).

A nice property of the Dirac delta function is that if you integrate any function with it (over all of space), the delta function “picks out” the value of the function at the point where the argument of the delta function is zero (so, at r=0 in our case):
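In standard notation, the defining properties of the three-dimensional delta function and the "picking out" property described above can be written as follows, where f stands for any well-behaved function (a placeholder symbol used here for illustration).

$$
\delta^3(\mathbf{r}) = 0 \;\;\text{for}\;\; \mathbf{r}\neq\mathbf{0},
\qquad
\int \delta^3(\mathbf{r})\,d^3r = 1,
\qquad
\int f(\mathbf{r})\,\delta^3(\mathbf{r})\,d^3r = f(\mathbf{0})
$$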

Anyway, getting back to our calculation, we’ll now use Gauss’s law for the electric field, which states the following:

This gives us the divergence (d) of our vector field (the electric field) if we also insert the charge density function:

The electric potential is then given by the general solution described earlier:

This is actually the simplest integral we could possibly do. The Dirac delta function simply picks out the value of our integrand at the point r’=0, so we just get:

So, that’s our electric potential. Now we’re going to calculate the electric field from this by using the formula for the gradient in spherical coordinates:

Now, you could of course just convert the potential to Cartesian coordinates by using r=√(x²+y²+z²) and then calculate the gradient by using the standard formula for the gradient in terms of x, y and z, but this just becomes unnecessarily complicated.

Anyway, the electric field is then:

This is, of course, the standard formula for the electric field of a point charge.
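Collected in one place, the chain of steps in this calculation can be sketched as below. The symbol V for the electric potential and the convention E = -∇V are assumptions made here; ε₀ is the vacuum permittivity and r̂ the radial unit vector.

$$
\begin{aligned}
\rho(\mathbf{r}) &= q\,\delta^3(\mathbf{r}), \\
\nabla\cdot\mathbf{E} &= \frac{\rho}{\varepsilon_0} = \frac{q}{\varepsilon_0}\,\delta^3(\mathbf{r}), \\
V(\mathbf{r}) &= \frac{1}{4\pi}\int \frac{q\,\delta^3(\mathbf{r}')}{\varepsilon_0\,|\mathbf{r}-\mathbf{r}'|}\,d^3r' = \frac{q}{4\pi\varepsilon_0\,r}, \\
\mathbf{E} &= -\nabla V = -\frac{\partial V}{\partial r}\,\hat{\mathbf{r}} = \frac{q}{4\pi\varepsilon_0\,r^2}\,\hat{\mathbf{r}}.
\end{aligned}
$$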

The cool thing about this, in my opinion, is that this formula is mostly just a mathematical consequence of the Helmholtz decomposition (and Maxwell’s equations, of course).

The magnetic field of this stationary point charge is just zero as magnetic fields are only generated by moving charges (currents).

A more interesting example of applying the solutions to Poisson’s equations and the Helmholtz decomposition comes from calculating the magnetic field of a point charge that is moving.

Now, we’ll assume that the charge q is moving with a constant velocity that is not too fast (I’ll explain what this means later).

The reason for these assumptions is that otherwise we would have to deal with possible relativistic effects as well as radiation fields created by the acceleration of the charge, which would make our calculations very complicated (although this can actually be done by using something called the Liénard–Wiechert potentials).

Anyway, the current distribution of this moving point charge is given by:

This describes the current density of the point charge located at r=0 and its velocity given by the v-vector (the components of this vector are just constants).

Similarly to the charge density in the previous example, the current density is zero everywhere except at the point r=0 where the moving charge is. This is what the Dirac delta function describes here.

Here we’re essentially placing the origin at the instantaneous position of the moving charge, so that r always measures the displacement from the charge to the point where the field is evaluated.

Anyway, we can now use the Ampere-Maxwell law to get the curl of the magnetic field (note; since we’re assuming that the velocity is slow, we can ignore the ∂E/∂t -part of this law):

This is our c-vector (the curl of B), which we get by inserting the current density function:

The magnetic vector potential can then be calculated from this by using the general solution to Poisson’s equation:

The Dirac delta function will, once again, pick out the value of the integrand at the point r’=0, so we just get:

So, this is our magnetic vector potential for a moving point charge with constant velocity. The magnetic field can then be obtained from this by taking the curl:

Here we can make use of the “product rule” for the curl of the product of a scalar field and a vector field (the scalar field being μ₀q/(4πr) and the “vector field”, which is really just a constant vector, being the velocity v):

Applying this to the B-field, we have:

Now, since we assumed from the start that the velocity is constant (neither its magnitude nor its direction changes), its curl must be zero:

As a result, the magnetic field is:

Let’s calculate this gradient by applying the gradient formula in spherical coordinates (for a function that only depends on r):

With this, we finally get the magnetic field generated by a moving charge q with constant velocity v (at the distance r from the moving charge):

Now, this magnetic field always points in the direction perpendicular to both the r-hat vector (which is a unit vector pointing from the charge to the point where the field is measured) and the velocity vector.

This tells you that the magnetic field “circulates” around the moving charge and that there is no magnetic field along the line of motion of this charge.
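The last steps of this calculation can be checked numerically. The sketch below takes the vector potential in the form A = K v / r, where K stands in for the prefactor μ₀q/(4π) and is set to 1 for illustration, and compares a finite-difference curl of A with the closed form K (v × r̂)/r² that follows from the product rule and ∇(1/r) = -r̂/r². The velocity and sample point are hypothetical values.

```python
import math

K = 1.0               # stands in for mu_0 * q / (4 * pi); illustrative units
V = (0.3, -0.2, 0.5)  # hypothetical constant velocity vector
H = 1e-5              # finite-difference step (illustrative choice)

def A(x, y, z):
    # Vector potential of the slowly moving point charge: K * v / r
    r = math.sqrt(x * x + y * y + z * z)
    return tuple(K * v / r for v in V)

def partial(field, k, i, p):
    """Central difference of component k of field along axis i at point p."""
    lo, hi = list(p), list(p)
    lo[i] -= H
    hi[i] += H
    return (field(*hi)[k] - field(*lo)[k]) / (2 * H)

def curl(field, p):
    return (
        partial(field, 2, 1, p) - partial(field, 1, 2, p),
        partial(field, 0, 2, p) - partial(field, 2, 0, p),
        partial(field, 1, 0, p) - partial(field, 0, 1, p),
    )

def closed_form(x, y, z):
    # K * (v x r_hat) / r**2
    r = math.sqrt(x * x + y * y + z * z)
    rh = (x / r, y / r, z / r)
    cross = (
        V[1] * rh[2] - V[2] * rh[1],
        V[2] * rh[0] - V[0] * rh[2],
        V[0] * rh[1] - V[1] * rh[0],
    )
    return tuple(K * c / r**2 for c in cross)

point = (1.0, 2.0, -0.5)
B_numeric = curl(A, point)
B_formula = closed_form(*point)
dot_with_v = sum(b * v for b, v in zip(B_numeric, V))
print("curl of A   :", B_numeric)
print("closed form :", B_formula)
print("B . v       :", dot_with_v)
```

The two results should agree up to discretization error, and the dot product with v should be close to zero, consistent with the field being perpendicular to the velocity.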

Now, even though the general solutions of Poisson’s equations are somewhat complicated in a lot of cases, the main point of all this is that, given certain boundary conditions, a vector field itself can be uniquely reconstructed purely out of its curl and divergence.

This is an incredibly powerful fact and combined with the fact that we can also solve Poisson’s equations in the general case with these boundary conditions, we’ve essentially just solved a huge class of problems regarding vector fields.

Another nice thing is that most vector fields in physics, for example, do have this property of vanishing at infinity, so essentially the Helmholtz decomposition theorem works very well in physical applications.

Next, we’ll actually look at some more examples of where the Helmholtz decomposition theorem can be applied, particularly for physics.

Before moving on, what you should really take away from this is that the Helmholtz decomposition theorem essentially consists of two statements:

- Any vector field that satisfies the boundary conditions stated earlier can be decomposed into a curl-free and a divergence-free part.

- Any such vector field can be uniquely reconstructed simply by knowing its curl and divergence.

This theorem is, in fact, so powerful that it is often called the “fundamental theorem of vector calculus”. Its importance to physics is huge and you’d be surprised how many areas of physics, from electrodynamics and fluid dynamics to even quantum field theories, use this theorem without explicitly stating it.

Applications of The Helmholtz Decomposition Theorem

The Helmholtz decomposition theorem is especially important for physics, since most physical vector fields (gravitational fields, electric and magnetic fields, flow velocity fields etc.) are often assumed to vanish at infinity.

This then means that these fields can be uniquely determined from their curl and divergence alone.

This can be applied, for example, in electrostatics and magnetostatics, where Maxwell’s equations are essentially four equations that determine the curl and divergence of the electric and magnetic fields, given a particular charge and current distribution:

In fact, the Helmholtz decomposition theorem gives you some deep insights into why the Maxwell equations look exactly the way they do; they are equations for the curl and divergence of the electric and magnetic field, because this is ALL we need to know to completely specify the fields themselves.

In the general case, if the fields are also taken to be time-dependent, then the electric and magnetic fields are described using electrodynamics (instead of electrostatics).

In any case, the electric and magnetic fields can be decomposed into their Helmholtz decompositions (in terms of the electric scalar and vector potentials and the magnetic scalar and vector potentials):

Using the general Maxwell equations, it’s possible to impose some restrictions on these, namely for the magnetic scalar potential and the electric vector potential (this is explained down below):

This, in fact, eliminates the magnetic scalar potential and the electric vector potential completely.

Therefore, ALL electric and magnetic fields can be described in terms of an electric scalar potential and a magnetic vector potential:

The explanation for where these come from is purely a consequence of the Helmholtz decomposition theorem and Maxwell’s equations. You’ll find a derivation of these down below.

We’ll begin by using the Helmholtz decomposition of the electric and magnetic fields:

Let’s begin by looking at the magnetic field. In particular, we’ll apply Gauss’s law for the magnetic field (one of Maxwell’s equations), which states that the divergence of the magnetic field is always zero:

Inserting B in terms of the potentials into this, we get:

So, the magnetic scalar potential is therefore a harmonic function (its Laplacian is zero).

However, when we applied the Helmholtz decomposition, we’ve already implicitly made the assumption that the magnetic field should vanish at infinity.

This means that the gradient of any harmonic function we add to our vector field is, by assumption, zero (we discussed this earlier). Therefore, since the magnetic scalar potential is a harmonic function, its gradient here should be zero and we have the condition:

That’s the first restriction we have. Using this, the magnetic field is written as:

Let’s now use Faraday’s law for the electric field, which describes the curl of the electric field in terms of the time derivative of the magnetic field:

Now, inserting the magnetic field we just derived as well as the Helmholtz decomposition for the electric field, this becomes:

On the left-hand side, the curl of the gradient will be zero. On the right-hand side, we can move the time derivative to act on A (since it’s a partial derivative and the curl operator does not “depend” on time). We then have:

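Since both sides are the curl of something, the quantities inside the parentheses can differ at most by the gradient of a scalar function (whose curl is zero). Such a gradient can be absorbed into the scalar potential term of the electric field, so without loss of generality we can take them to be equal: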

That’s the restriction we have for the electric vector potential K, which means that we can express the electric field in terms of the magnetic vector potential:

This also completely eliminates the need to have an electric vector potential, which is why you’ve probably never even heard of such a thing.
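The derivation above can be sketched in symbols as follows. The names V (electric scalar potential) and Ψ (magnetic scalar potential) are notation assumed here for illustration, as is the minus sign in front of the gradients; K is the electric vector potential and A the magnetic vector potential.

$$
\begin{aligned}
\mathbf{E} &= -\nabla V + \nabla\times\mathbf{K}, \qquad \mathbf{B} = -\nabla\Psi + \nabla\times\mathbf{A}, \\
\nabla\cdot\mathbf{B} = 0 \;&\Rightarrow\; \nabla^2\Psi = 0 \;\Rightarrow\; \nabla\Psi = \mathbf{0} \;\Rightarrow\; \mathbf{B} = \nabla\times\mathbf{A}, \\
\nabla\times\mathbf{E} = -\frac{\partial\mathbf{B}}{\partial t} \;&\Rightarrow\; \nabla\times(\nabla\times\mathbf{K}) = \nabla\times\left(-\frac{\partial\mathbf{A}}{\partial t}\right) \;\Rightarrow\; \nabla\times\mathbf{K} = -\frac{\partial\mathbf{A}}{\partial t}, \\
\mathbf{E} &= -\nabla V - \frac{\partial\mathbf{A}}{\partial t}.
\end{aligned}
$$

The step from equal curls to equal fields in the third line holds up to a gradient term, which is taken to be absorbed into V.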

Now, in terms of the physical applications of the Helmholtz decomposition, we’ve only stated that it can be done for the electric and magnetic fields, for example. However, another question is why you would want to do so.

The simple answer is that, in many cases, it is easier to deal with potentials instead of the vector fields themselves.

We can always do this because knowing the potential immediately allows us to calculate the field, so we can always choose whether we want to work with the potential or with the field itself.

The electric potential in particular is often very useful, as it is a scalar field (scalar fields are often much easier to deal with than vector fields).

For example, the electric potential can be used to directly compute the work done or the voltage between two points, while doing the same thing from the electric field itself is a more difficult task.

So, as a high-level point, the Helmholtz decomposition theorem gives us a way to choose whether we want to work with a vector field itself or with its potentials. Both ways are equivalent, but sometimes one is easier than the other.

The Hodge Decomposition Theorem

One more thing to briefly address is the question of what exactly happens if we drop our assumption of the vector field vanishing at infinity.

In this case, we can only build the Helmholtz decomposition of the vector field up to the gradient of a harmonic function.

Based on this idea, any vector field (not just one that vanishes at infinity) can be built from the typical Helmholtz decomposition with an additional harmonic part. This is called the Hodge decomposition theorem and it can be stated as follows:

This H here is a “harmonic vector field”, which simply just means that it is the gradient of some harmonic function:

A harmonic vector field will always have both zero divergence and zero curl:

The above way to express any vector field is called the Hodge decomposition of a vector field; it “decomposes” any vector field into a curl-free, a divergence-free and a harmonic part.
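In symbols, the statement can be sketched as below, again assuming the sign convention with a minus sign on the scalar-potential term. H denotes the harmonic vector field and φ the harmonic function it is derived from.

$$
\begin{aligned}
\mathbf{F} &= -\nabla\Phi + \nabla\times\mathbf{A} + \mathbf{H}, \\
\mathbf{H} &= \nabla\varphi \quad\text{with}\quad \nabla^2\varphi = 0, \\
\nabla\cdot\mathbf{H} &= \nabla^2\varphi = 0, \qquad \nabla\times\mathbf{H} = \nabla\times(\nabla\varphi) = \mathbf{0}.
\end{aligned}
$$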

Another way to write the Hodge decomposition of a vector field is:

Now, if the Hodge decomposition seems a little pointless (since it basically appears to be just another way of stating Helmholtz’s theorem), it is actually far from it.

In fact, the Hodge decomposition theorem is a generalization of the Helmholtz decomposition and far more applicable than the Helmholtz decomposition.

This is because the Hodge decomposition can be directly applied in the study of differential geometry and to tensor fields.

Intuitively, the idea is the same; a differential form or a tensor field can be decomposed into a “divergence-free”, a “curl-free” and a “harmonic part”.

Mathematically, however, this will require the use of various more sophisticated differential operators, such as exterior derivatives, but the basic intuitive picture remains the same.

Lesson Summary

Quite a few things have been covered in this lesson, so a summary of the key concepts may be useful:

- The Helmholtz decomposition theorem states that any vector field satisfying suitable boundary conditions (it vanishes far away) can be decomposed into a curl-free and a divergence-free part in terms of a scalar potential and a vector potential.

- The Helmholtz decomposition is unique given that the vector field vanishes far away.

- Using the Helmholtz decomposition theorem and appropriate boundary conditions, it’s possible to uniquely construct a vector field just from its curl and divergence.

- This is done by solving Poisson’s equations for the scalar and vector potential.

- The Helmholtz decomposition theorem has applications in practically every area of physics and it’s one of the most important theorems in vector calculus.

- The Helmholtz decomposition theorem can be generalized even further as the Hodge decomposition theorem.